Using point tracking to analyze animal behavior.

Hackers: Anushmita Dey, Laura Nino, Lauren Rabe, Janielle Jackson, and Rhea Kapadia. Graduate Students: Namitha Padmanabhan and Pulkit Kumar.

Hacker

GradIO + SAM (Segment Anything Model) + CoTracker + Google Colab

2024

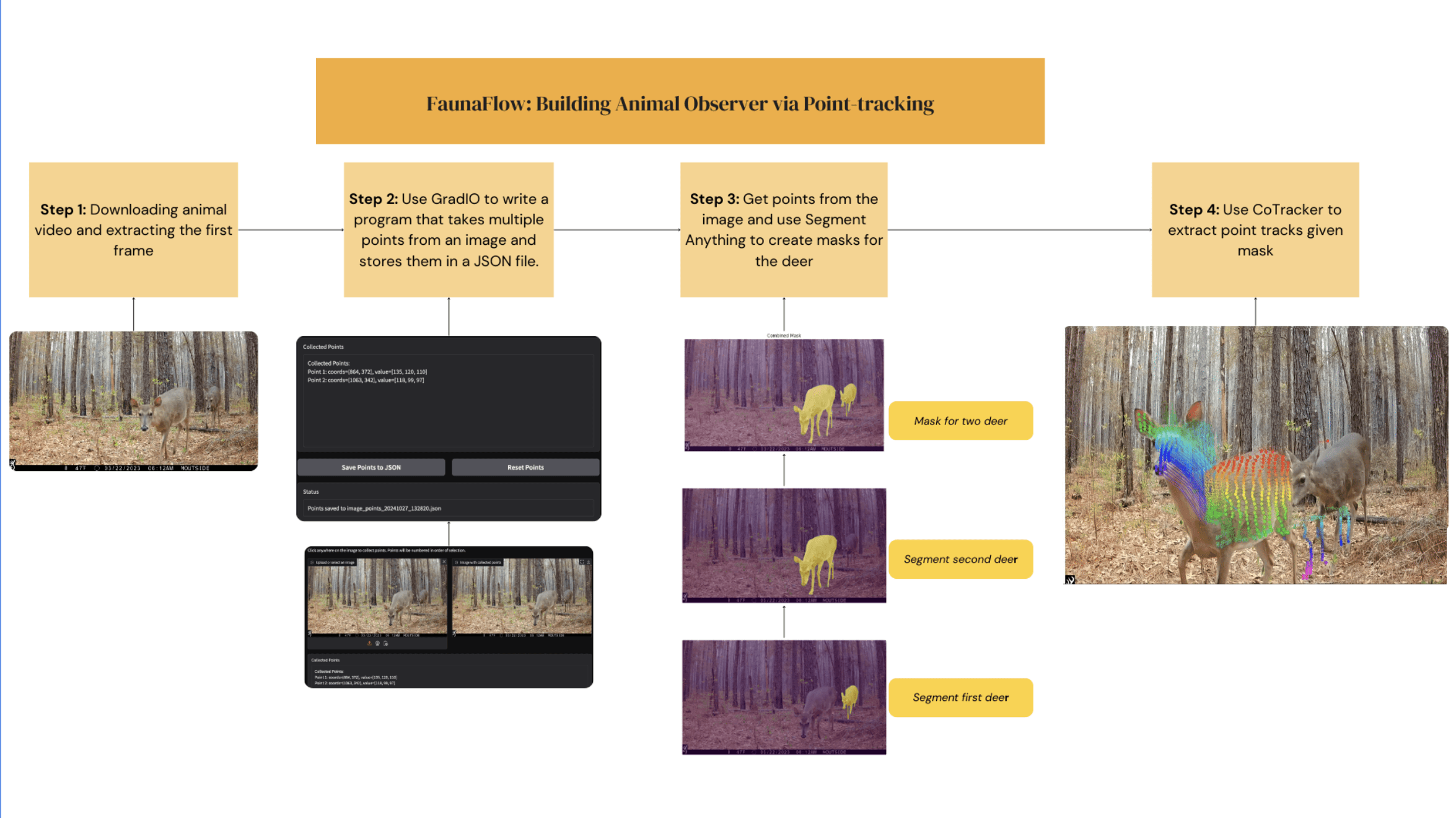

Process

The initial step involved searching for animal videos featuring clear movement captured by a stationary camera. These videos were then broken down into individual image frames. Using the first frame from each video, masks were created to isolate individual animals. These masks were generated using SAM (Segment Anything Model), which can create precise masks from just a single point selected within the animal's body. In cases where multiple animals were present, their individual masks were combined into a single mask.
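The mask-combining step can be sketched as follows. This is a minimal illustration, not our exact hackathon code: it assumes each SAM mask has already been exported as a boolean NumPy array with the same height and width as the frame, and the helper name `combine_masks` and the toy arrays are hypothetical.

```python
import numpy as np


def combine_masks(masks):
    """Merge per-animal boolean masks (each H x W) into a single mask.

    A pixel is set in the result if it is set in any input mask.
    """
    if not masks:
        raise ValueError("need at least one mask")
    stacked = np.stack([np.asarray(m, dtype=bool) for m in masks])
    return stacked.any(axis=0)


# Toy 2x3 masks standing in for two SAM outputs (hypothetical data).
animal_a = np.array([[1, 0, 0], [0, 0, 0]], dtype=bool)
animal_b = np.array([[0, 0, 0], [0, 1, 1]], dtype=bool)
combined = combine_masks([animal_a, animal_b])
```

A logical OR keeps the result binary even where two animals overlap, which a plain sum of the masks would not.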

The second part of the process, led by Anushmita and me, begins by loading the first frame and overlaying it with the corresponding mask(s) to ensure precise pixel alignment. We utilized the CoTracker model as our foundational software for point tracking. The videos, converted into PyTorch tensors, are fed into the model alongside their masks. The final output produces a colorful visualization that effectively tracks and displays the animals' movements throughout the video.
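The alignment check and the reshaping that happens before the video becomes a tensor can be sketched roughly like this. It is an illustration under assumptions rather than our actual notebook: frames are taken to be `uint8` RGB arrays, the helper names `overlay_mask` and `to_btchw` are hypothetical, and the (batch, time, channels, height, width) layout is what we assume the tracker expects. The sketch stays in NumPy; in Colab the reshaped array would then be wrapped with `torch.from_numpy`.

```python
import numpy as np


def overlay_mask(frame, mask, color=(255, 0, 0), alpha=0.5):
    """Blend a solid color into the masked pixels of an RGB frame.

    Raises if the mask and frame sizes differ, which is the
    pixel-alignment problem the overlay is meant to expose.
    """
    frame = np.asarray(frame)
    mask = np.asarray(mask, dtype=bool)
    if frame.shape[:2] != mask.shape:
        raise ValueError(
            f"mask {mask.shape} does not match frame {frame.shape[:2]}"
        )
    out = frame.astype(np.float32)
    tint = np.array(color, dtype=np.float32)
    out[mask] = (1.0 - alpha) * out[mask] + alpha * tint
    return out.round().astype(np.uint8)


def to_btchw(frames):
    """Reorder (T, H, W, C) frames into a float (1, T, C, H, W) array."""
    video = np.asarray(frames, dtype=np.float32)
    if video.ndim != 4:
        raise ValueError("expected frames with shape (T, H, W, C)")
    return video.transpose(0, 3, 1, 2)[None]


# Toy data: four black 2x2 frames and a one-pixel mask (hypothetical).
frames = np.zeros((4, 2, 2, 3), dtype=np.uint8)
mask = np.array([[True, False], [False, False]])
preview = overlay_mask(frames[0], mask, alpha=1.0)
video = to_btchw(frames)
```

Viewing `preview` before running the tracker makes a shifted or wrongly sized mask obvious at a glance.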

Takeaway

The main challenge we faced in this hackathon was navigating the technology. We spent most of our time working around GPU usage limits, loading videos, and using Google Colab's collaboration feature. Here's a rundown of some problems and solutions.

We tried several workarounds for our GPU usage limits, such as switching between different email accounts to stretch our resources and obtaining credits for Google Colab. However, these workarounds consumed a lot of our time.

We were given a barebones demo of the CoTracker model, which we used for point tracking. The new videos we supplied had different frame rates (frames per second), so we had to make sure these details lined up between our code and our videos.
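One way to handle mismatched frame rates is to pick a subset of frame indices so that every video is processed at the same rate. The sketch below shows that idea only; it is not necessarily how we resolved it during the hackathon, and the function name `resample_indices` and the example numbers are hypothetical.

```python
def resample_indices(num_frames, src_fps, dst_fps):
    """Return the source frame indices to keep so that a clip recorded
    at src_fps plays back at roughly dst_fps with the same duration."""
    if num_frames <= 0 or src_fps <= 0 or dst_fps <= 0:
        raise ValueError("num_frames and both fps values must be positive")
    duration = num_frames / src_fps            # clip length in seconds
    n_out = max(1, int(round(duration * dst_fps)))
    step = src_fps / dst_fps                   # source frames per output frame
    # Clamp to the last frame so rounding never indexes past the end.
    return [min(num_frames - 1, int(i * step)) for i in range(n_out)]


# A 2-second clip at 30 fps reduced to 10 fps keeps every third frame.
kept = resample_indices(60, 30, 10)
```

Applying the same target rate to every clip keeps the frame count, and therefore GPU memory use, predictable across videos.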

As you can see in the GIF of the deer, the points do not accurately capture the movement of its legs. Given more time, I am confident that my team and I would be able to correct this issue.